Excerpted from a Littler Mendelson PC Blog by Ivie A. Serioux and Jerry Zhang

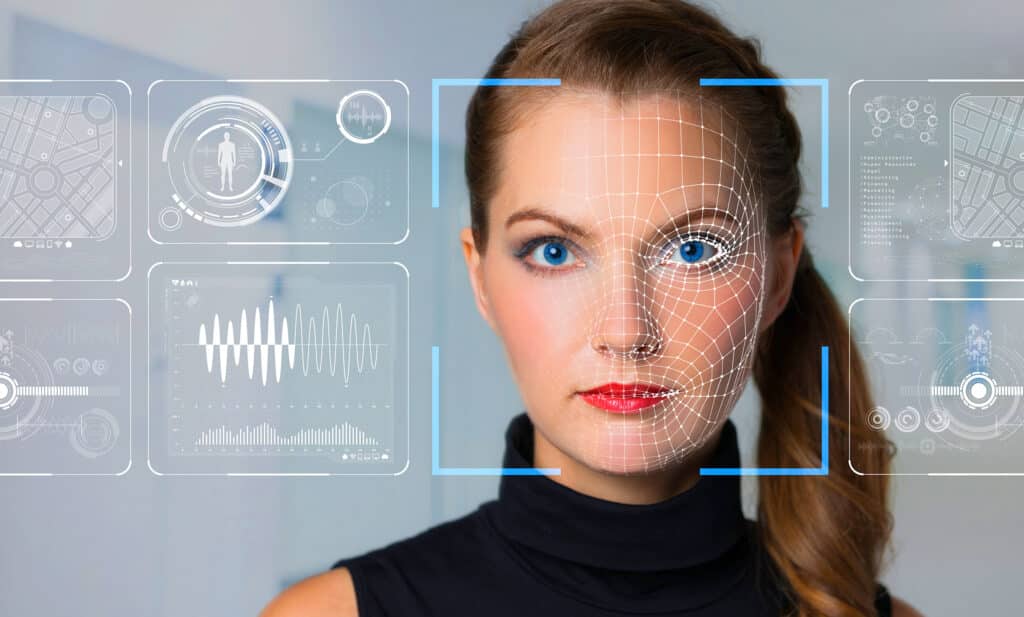

The landscape of workplace harassment has evolved beyond physical offices, after-hours texts and off-site events. Employers now face a sophisticated and deeply unsettling threat: deepfake technology. Once the domain of tech experts, AI-powered tools that generate hyper-realistic but fabricated videos, images, and audio are now widely accessible — even to those with minimal technical skills.

As of 2023, 96% of deepfakes were sexually explicit, overwhelmingly targeting women without their consent. By 2024, nearly 100,000 explicit deepfake images and videos were being circulated daily across more than 9,500 websites. Alarmingly, a significant portion of these featured underage individuals.

While image-based sexual abuse is not new, AI has dramatically amplified its scale and impact. In the workplace, deepfakes can be weaponized to harass, intimidate, retaliate, or destroy reputations—often with limited recourse under traditional employment policies.

For HR leaders, legal counsel, and executives, the question is no longer if deepfakes will affect your workforce but when, and how prepared your organization is to respond.

The Rise of Deepfakes in the Workplace

Deepfakes are synthetic media, i.e., content created or manipulated using machine learning, particularly deep learning models trained on large datasets of images, voices, or videos, in an effort to create false (and typically malicious) information. With minimal effort, bad actors can now impersonate coworkers, executives, or clients—making deepfakes a potent tool for fraud, impersonation, and harassment.

Employers are increasingly encountering:

- fake explicit videos falsely attributed to employees;

- voice deepfakes used to send inappropriate messages; and

- manipulated recordings simulating insubordination or offensive conduct.

These incidents cause severe reputational and psychological harm to victims and put employers in a difficult position when making credibility determinations, especially when they rely on outdated policies and investigative procedures.

Evolving Legal Framework

While federal law has yet to catch up, employers should keep in mind the existing grounds for litigation:

- Employers may be liable under Title VII if deepfakes affect workplace dynamics, even if the content was created off-hours, because it can give rise to a hostile work environment claim.

- Failure to act on known or reasonably foreseeable deepfake harassment may also expose employers to negligent supervision or retention claims.

Emerging Federal/State Laws and Initiatives

- The federal TAKE IT DOWN Act, 47 U.S. Code § 223(h) (signed May 19, 2025): This bipartisan law provides a streamlined process for minors and victims of non-consensual intimate imagery to request removal from online platforms. Platforms must comply within 48 hours or face penalties.

- Florida’s “Brooke’s Law” (HB 1161) (signed June 10, 2025): Requires platforms to remove non-consensual deepfake content within 48 hours or face civil penalties under Florida’s Deceptive and Unfair Trade Practices Act.

- The EEOC’s 2024–2028 Strategic Enforcement Plan emphasizes scrutiny of technology-driven discrimination and digital harassment.

- Proposed changes to Federal Rule of Evidence 901 and the proposed creation of FRE 707 would require parties to authenticate AI-generated evidence and meet expert witness standards for machine outputs, especially in cases involving deepfakes or algorithmic decision-making.

While these laws primarily target content platforms, they signal a growing legislative intolerance for deepfake abuse—especially when it intersects with sexual harassment or reputational harm.

Key Employer Risks and Blind Spots

Employers face several legal and operational vulnerabilities:

- Policy Gaps: Most handbooks don’t address synthetic media or manipulated content.

- Delayed Response: Without clear protocols, investigations may be slow or ineffective.

- Liability Exposure: Employers may face lawsuits from employees or third parties harmed by unaddressed deepfake harassment.

- Reputational Harm: Public exposure of deepfake incidents can erode trust and damage workplace culture.
